When the Machine Consumes Itself

Artificial intelligence promises to liberate us—but what from, and what for?

Welcome to Ever Not Quite, a newsletter about technology and humanism. In this installment, I’m thinking about what are said to be some of the implications of AI for the deeply overlapping fields of education and labor. Education, of course, has always had some connection to the future role that students are expected to play in society and in the economy. But since the industrial revolution, labor has often taken narrow and dehumanizing forms that call upon only a small set of a worker’s human capacities in its promotion of an explosion of widespread material abundance. Meanwhile, education has been shaped to support the needs of the (post)industrial economy—and now often defines itself exclusively in terms of that purpose. But, if the techno-optimists are to be believed, all this is about to change as AI assumes the narrowest tasks required by the modern economy—above all those that chiefly involve the manipulation of symbols—and renders obsolete the need for the young to be educated to perform them.

I try to imagine what it might mean to take seriously some of the more utopian visions for the future of AI—those in which much of what is now a human burden has been graciously taken over by machines. The image that comes to mind is one in which key features of the techno-industrial system that has created artificial intelligence in the first place are themselves consumed by AI as it takes over more and more of the systems out of which it was developed. Whether or not this future comes to pass, we are left to wonder—with historically unprecedented urgency—what it would mean for the human workers who have been diminished by the conditions of modern labor to be liberated from its demands when the machine consumes itself.

I hope you find it interesting, and thank you for reading!

It has been suggested that the emerging competencies of artificial intelligence, as it becomes capable of doing more and more of what human beings do, have seemed to track our own evolutionary history, only in reverse: At the moment, it is said, AI excels in the rational and analytical work which, when it is performed by humans, springs from the more recently evolved parts of the brain. Moreover, these areas are relatively late to become fully developed, and are employed particularly by white-collar workers who have spent the first decades of their lives learning how to manipulate symbols (i.e., numbers and letters) in what are considered to be the correct ways. Whether or not there is anything to this observation, it’s certainly true that there is something forced about the development of numeracy and literacy in children, although many of us who have been out of primary school for some time easily forget how hard-won these skills really are. It’s also clear that, despite its mastery over symbols, artificial intelligence still has the furthest to go in mimicking our most ancient, pre-rational cognitive functions, which are the first to develop in the young and are responsible for orienting us in the physical and social world in ways attributable to what we might call a form of “common sense”.

What artificial intelligence still lacks (although the research is, as they say, “ongoing”) is the visceral amalgam of nerves, reflexes, and emotions that constitutes the holistic experience of being-in-the-world—the Lebenswelt, or lifeworld, of the phenomenological tradition.[1] That is to say, the primary, pre-symbolic contact with the world that even the simplest organisms have in one way or another still eludes the capabilities of the most advanced artificial systems. For now, AI is eerily competent at generating the spreadsheets and text documents that have traditionally been produced by human beings whose capacities for analytical thinking have been honed through many years of schooling, but because it has no orientation in the world, it remains ill-equipped for the many embodied and social activities which come naturally to children and require no formal education at all. Despite this, we perceive these systems as “intelligent” precisely to the degree that we have already defined intelligence in narrow terms that are chiefly demonstrated by humans through the manipulation of symbols.

I sometimes wonder to what extent the historically recent tendency to view formal—and especially higher—education merely as job training, its rationale reduced to preparing students to find their place in one of the narrow but highly trained occupations that constitute so much modern labor, is just a perverse extension of the traditional expectation that primary schools teach the narrowly formalistic skills of symbol-manipulation. We are accustomed by now to hearing schools, colleges and universities make the case for themselves on primarily economic grounds: going to school, they say, will get you a job in the modern economy. But one of the warnings we frequently encounter amidst the much larger story of AI’s recent salience is the prediction that in the very near future, and certainly before we are fully prepared, many people who thought they were investing in their own marketability for employment—say, by learning to code or becoming proficient in office software—will discover that, through no fault of their own, the time and money spent on their schooling was not the guarantor of lifelong job security they had hoped it was. To the extent that this prediction is borne out, it stands to reason that the emergence of an artificial “intelligence” designed to excel in precisely the areas that have become the focus of schooling will call into question the need for anyone to become educated in this distinctly modern sense.

This account is what I have taken to calling the “revenge of the liberal arts”: education whose foremost goal is to prepare students to find employment in a postindustrial economy loses much of its rationale in a world where the economic machine runs largely autonomously by means of artificial intelligence with minimal human oversight. By contrast, liberal arts education—which concerns itself with developing the open-ended capacities of its students not by virtue of their status as future job-holders, nor even as purely rational beings, but of their very humanity—steps forward to recommend itself as the appropriate vocation for those who have been liberated from economic necessity. Indeed, this is precisely the sense in which the liberal arts were traditionally understood to be “liberal” in the first place: they represent the intellectual pursuits worthy of a person who has been freed from the servile demands of labor, regardless of how that freedom has been secured. In the ancient world where the idea of the “artes liberales” originated, it was understood that the freedom from having to perform labor was made possible by offloading that labor onto slaves. In its contemporary permutation, AI is poised to take over the social and economic role that slaves once held, except the promised liberation will be much more egalitarian in its distribution.

But there is another account of the near-term disruptions of artificial intelligence, one that picks up the same stick from the opposite end, surveying the possibilities of a potential AI takeover of large portions of the economy not through the lens of education, but of labor. In a powerful essay from this past summer, L. M. Sacasas observed just how well suited artificial intelligence is to replace the people who work within postindustrial systems whose imperative is to maximize efficiency and output. Sacasas writes:

The claim or fear that AI will displace human beings becomes plausible to the degree that we have already been complicit in a deep deskilling that has unfolded over the last few generations. Or, to put it another way, it is easier to imagine that we are replaceable when we have already outsourced many of our core human competencies.

And what’s more, because we have grown accustomed to the narrow and dehumanizing nature of much modern labor, it is only natural that we would not just tolerate its automation, but relish it:

If a job, a task, a role, or an activity becomes so thoroughly mechanical or bureaucratic, for the sake of efficiency and scale, say, that it is stripped of all human initiative, thought, judgment, and, consequently, responsibility, then of course, of course we will welcome and celebrate its automation.

Sacasas’ argument does not suggest that mechanical, dehumanizing labor (and, soon enough, even more sophisticated forms of work) is not replaceable by AI, nor even necessarily that it should not be discarded in some way that is humane and respectful to those who currently perform it; it simply observes that what makes the substitution possible in principle is the fact that we find ourselves on the receiving end of a process of industrialization that has insisted on the efficiency and output of work at the expense of its dignity and its engagement of the full breadth of our capabilities.

Of course, people living in the modern world possess a material prosperity made uniquely possible by elaborate economic and government systems and services. But the unseen price of this affluence, it seems, is that all this is the byproduct of a worldview that substitutes a narrow set of metrics—those that were already particularly well suited to measurement and optimization—for a broader set of human values that do not admit so easily of technical mastery. As a result, we’ve allowed more substantive evaluations of human well-being—those not always advanced by the abundance that modern capitalism does in fact provide—to atrophy. In other words, as has frequently been argued, the process by which, particularly since the last century, we have become materially wealthy by the standards of any prior generation is one and the same as the process by which our culture has come mistakenly to identify material abundance with individual flourishing and cultural maturity. This is a longstanding line of cultural criticism that I needn’t rehearse in any detail here, however necessary it may be to remind ourselves of it now and again.

From an optimistic perspective, the promise of artificial intelligence is that it seems to offer an opportunity to reverse some of the dehumanizing assumptions that have shaped modern labor and education. But we should not lose sight of the fact that it proposes to do so by means of the same techno-rationalistic logic that forged and encouraged these assumptions in the first place. As Sacasas goes on to argue, “the machine will liberate us only to the degree that it swallows itself, that it swallows up all of what the machine, in its longstanding technological, economic, and institutional forms, had required and demanded of us.” That is to say, we can regard the changes that artificial intelligence will bring as liberatory and ennobling only to the extent that they ultimately undermine the very ways of thinking that are presently bringing AI itself into the world: in short, it must consume the various systems that require us to view ourselves as machines, but spare every jot of the humanity that was ours to begin with, and which this transformation has promised to restore.

Sacasas connects this point to the well-known words of Jesus in the synoptic gospels: “Render therefore to Caesar the things that are Caesar’s, and to God the things that are God’s”. He concludes:

What would it mean to render to the machine, what is the machine’s in precisely this spirit? To regain a sense of what it is to be a person, coupled with a subversive practice of the same, within a techno-economic system whose default settings incline us to forget this vital fact about ourselves and our neighbors? To reclaim a confidence in what we might be able to do ourselves and for one another in the face of an array of technologies, services, and institutions that market themselves under the implicit sign of our ostensible helplessness and the banner of a debilitating liberation? Let the machine have everything that is stamped with its spirit. Let us keep everything else.

One thing I like about this attitude is that it entertains the possibility of a receptive stance towards the promises of artificial intelligence, but without forfeiting the critical perspective which we must assume if we are to look with anything approximating optimism to a future in which AI has replaced any portion of human labor. That is to say, we must insist that the machine consume only itself and nothing else; insofar as the use of AI makes us less capable rather than more, diminished rather than enlarged, or more restricted rather than freer, it will have failed to deliver us a gift in any form that we can accept.

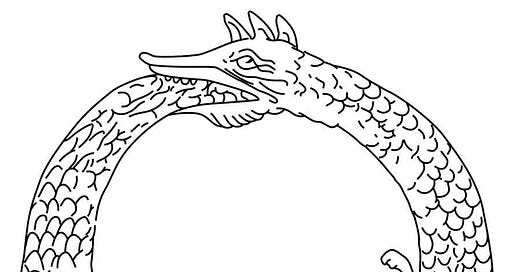

The image that comes to my mind is the ouroboros—a serpent circularly swallowing its own tail—which has appeared in many contexts and traditions around the world since time immemorial, and which has been understood in diverse ways. But it seems to me that it can be imagined to portray the consumption of the techno-industrial system by means of its own head—the vanguard of its progress—which swallows up the narrow and confining assumptions and practices that created it in the first place. Or, less metaphorically, it is the picture of artificial intelligence assuming—and thereby abolishing—the inhuman roles that humans have until now filled, and liberating us to pursue forms of life that are more elevating and befitting of our nature. But insofar as it represents the system’s self-cannibalism, the figure also suggests the futility of any hope that it will succeed: just as the serpent will never ultimately disappear by swallowing itself, it makes little sense to expect that the technology of artificial intelligence can fully reclaim all that bears the stamp of its image. In fact, we are less likely to see AI automation reverse the processes that set it into motion than we are to discover their further entrenchment in new and unforeseeable ways.

Leaving aside the sheer unlikeliness of the most optimistic outcomes, we must not fail to notice that the liberation they typically envision is an astonishingly paltry picture of what human life might look like under allegedly utopian conditions—a picture that rests implicitly on the assumption that labor is wholly alien to the core of what it is to be human. It is a vision that encourages us to think that we will be better off when, in the parlance of our times, the sphere of “work” contracts to the vanishing point and “life” expands into an endless vacation consisting of maximal consumption of unlimited products and experiences. We are goaded by the promise that we will be “free to create art”, never mind the fact that the meaning and significance of human-crafted images, sounds, and words will also have been irrevocably transformed and surpassed in every technical dimension by the same technology that will have made our newfound liberation possible.

We are being called by technological realities to ask a set of connected questions that have been asked often in many contexts and for different reasons, but which have seldom been elicited by so urgent a provocation: What would it mean to be freed from the constraints of a postindustrial economy, but without forfeiting the material prosperity which it makes possible? What would education look like if it were completely decoupled from the demands of a labor market? What is true freedom, and how are the young to be educated to realize it? Whether or not we ever share the world with what we are coming to call, perhaps less and less metaphorically, “intelligent machines”, these are questions which must not only be asked by every individual, but which must continuously animate the discourse of any self-examining society that finds itself, as ours now does, with this technology at its fingertips. This moment calls for more and clearer thinking about the meaning of genuine freedom not because the possibilities of AI automation will actually give it to us, but because of what such thinking can reveal about what is and always will be true, so long as human beings—in anything resembling the form we know—are alive to learn and to labor.

[1] I should acknowledge that this description does some injustice to phenomenology by seeming to place the physical/anatomical conditions for experience in a place of theoretical primacy, implicitly pushing the lifeworld itself into a secondary position. It might be pointed out that this framing completely misses the fundamental phenomenological insight.

I also read the Sacasas article you quoted and wonder if the same questions apply to technology in general and not just AI? I personally think AI is something of a marketing scam. It’s more of a rebranding of technology to get something more from us. There has always been the narrative that “technology is a tool” > “humans are evolved to use tools” > “therefore technology is inevitable”. I suspect this is all oversimplified on purpose. Technology may just be a useful construct to gain our compliance. Otherwise, we wouldn’t be threatened with AI, it would only be used in the service of human good. I think humans are way too susceptible to narratives and those in power use that against us.